Why do chatbots hallucinate?

Those of you who have used ChatGPT, Google Bard or similar tools have probably found that these chatbots sometimes make up the answers to our questions. These made-up answers are commonly known as hallucinations.

To understand why they happen, we first need to understand, at a very basic level, how these chatbots work. Their fundamental building block is the large language model (LLM). These models are trained on large amounts of data, such as web pages on the internet and books in the public domain, among others. The task of an LLM is to predict the next word or sequence of words following the text that the user enters. For example, if we ask a question, the model predicts the words that come right after that question. As the model has been trained on millions of documents, it is likely that in one (or many) of those documents it has seen a similar question, along with the answer. Roughly speaking, the LLM works like a statistical model: during training it learns the probability that two or more words go together, and during use it relies on those probabilities to predict the next sequence of words.
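To make the idea of "learning which words go together" concrete, here is a minimal sketch in Python. It is not how a real LLM is built (those use neural networks over far more context); it is a toy word-pair counter, and the three-sentence corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up training corpus (an assumption, for illustration only).
CORPUS = [
    "what colour is the sky ? the sky is blue .",
    "what colour is grass ? grass is green .",
    "the sky is blue today .",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

counts = train_bigrams(CORPUS)
print(next_word_probabilities(counts, "is"))
print(predict_next(counts, "is"))
```

In this toy corpus the word "is" is followed by "blue" more often than by anything else, so "blue" is the prediction. The model has no notion of whether that is true; it only knows what it has counted.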

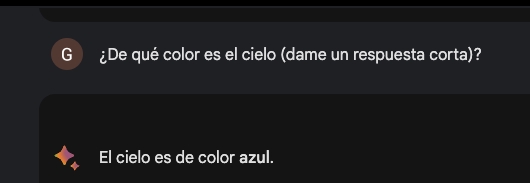

For example, if we ask: What colour is the sky?

During its training, the model has learned that the most likely next sequence of words is: The sky is blue.

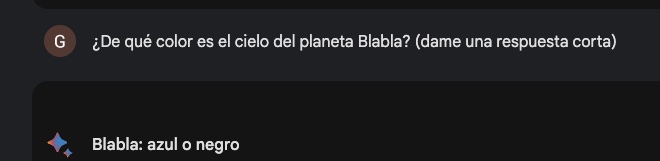

However, if we ask it about a planet that does not exist, we can see what happens:

Instead of answering that there is no such planet, it incorrectly predicts that the sky is blue or black. This is because, statistically, those seem to be the words most likely to follow the question.
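The same effect can be reproduced with a toy predictor. The sketch below is only an analogy, under stated assumptions: the corpus is invented, the planet name "zorblax" is made up, and the model is a simple word counter that looks at the last two words and falls back to the last word alone when it has never seen that pair. Real LLMs generalise in a far more sophisticated way, but the failure has the same shape.

```python
from collections import Counter, defaultdict

# Invented training corpus: every sky question it has seen has an answer.
CORPUS = [
    "what colour is the sky ? the sky is blue .",
    "what colour is the sky at noon ? the sky is blue .",
    "what colour is the sky at night ? the sky is black .",
]

def train(sentences):
    """Count followers for one-word and two-word contexts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(1, len(words)):
            counts[(words[i - 1],)][words[i]] += 1
            if i >= 2:
                counts[(words[i - 2], words[i - 1])][words[i]] += 1
    return counts

def complete(counts, prompt, max_words=10):
    """Greedily append the most likely next word until a full stop."""
    words = prompt.split()
    answer = []
    for _ in range(max_words):
        # Prefer the two-word context; back off to the last word alone.
        for context in (tuple(words[-2:]), tuple(words[-1:])):
            if context in counts:
                break
        else:
            break  # nothing was learned for this context at all
        word = counts[context].most_common(1)[0][0]
        words.append(word)
        answer.append(word)
        if word == ".":
            break
    return " ".join(answer)

counts = train(CORPUS)
known = complete(counts, "what colour is the sky ?")
unknown = complete(counts, "what colour is the sky on planet zorblax ?")
print(known)
print(unknown)
```

Both prompts produce the same confident answer. The toy model never saw "zorblax", so it falls back to what usually follows a question mark, and nothing in the procedure lets it say "that planet does not exist".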

The problem arises because we are using LLMs as if they were knowledge bases, when in fact they are statistical models that have been trained on knowledge bases. Their primary function is to predict, not to query a knowledge base. If the model saw the answer to our question during training, it will likely be able to answer correctly with its prediction. But if it has seen neither a similar question nor any information about the topic, it will still predict something, and that prediction is a hallucination.

Solving the hallucination problem is one of the big challenges data scientists and engineers are working on. One approach that is being explored with good results is to use the language model not to answer the question directly, but to translate it into a query to a database. In this way we no longer use the model as a knowledge base; we use it as an intermediary capable of understanding natural language and translating it into queries to the knowledge base. ChatGPT, for example, is introducing plugins that, among other things, allow it to go to knowledge sources directly, such as a Wikipedia plugin.
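A rough sketch of this "translate, then look up" pattern is shown below. Everything in it is an assumption made for illustration: the `sky_colours` table, its two rows, and especially `question_to_query`, which is a simple regular expression standing in for the language model. It does not reflect how ChatGPT plugins are implemented. The point is only that the final answer comes from the database, so a missing entry produces "I don't know" instead of a guess.

```python
import re
import sqlite3

def build_knowledge_base():
    """Create a tiny in-memory knowledge base (hypothetical schema and rows)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sky_colours (body TEXT PRIMARY KEY, colour TEXT)")
    conn.executemany(
        "INSERT INTO sky_colours VALUES (?, ?)",
        [("earth", "blue"), ("moon", "black")],
    )
    return conn

def question_to_query(question):
    """Hypothetical stand-in for the LLM: turn a question into SQL.

    A real system would ask the language model to produce the query;
    a regular expression is used here to keep the sketch runnable.
    """
    match = re.search(r"sky (?:on|of) (?:planet |the )?(\w+)", question.lower())
    if match is None:
        return None
    return "SELECT colour FROM sky_colours WHERE body = ?", (match.group(1),)

def answer(conn, question):
    """Answer from the knowledge base, never from a guess."""
    translated = question_to_query(question)
    if translated is None:
        return "I cannot translate that question."
    sql, params = translated
    row = conn.execute(sql, params).fetchone()
    if row is None:
        return "I don't know: the knowledge base has no entry for that."
    return f"The sky is {row[0]}."

conn = build_knowledge_base()
print(answer(conn, "What colour is the sky on Earth?"))
print(answer(conn, "What colour is the sky on planet Zorblax?"))
```

Because the facts live in an ordinary table, correcting or extending them is a matter of inserting or updating a row rather than retraining a model.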

Another problem with the current models is that their training is very expensive and time-consuming (it is estimated that training GPT-4 cost more than 100 million dollars), so it is not something that is done every day. This means that the information they return is often not up to date. However, by using external knowledge bases, which are much easier to update, this problem is greatly mitigated.

Now the next time you talk to an AI and it hallucinates, you’ll know why it does it. And if it has access to external sources, you can guide it to look for the answers in those sources, which is more likely to be successful.

Sources

YouTube, Conferencia ValgrAI: What’s wrong with LLMs and what we should be building instead?