Guide to robust development with AI: Cursor and GitHub Copilot

AI code assistants have spread quickly because of how much they can speed up software development. However, using them well takes more than writing a simple prompt, and the learning curve to take full advantage of them is steeper than we might think. If we underestimate it, we risk either a) producing poor-quality code with bugs and security flaws, or b) using only a fraction of their capabilities and getting fewer benefits than we could.

This post is a guide for programmers who want to start using AI code assistants, or who have already started and want to get more out of them. Although the title mentions Cursor and GitHub Copilot in VS Code, most of it applies to other assistants on the market. We begin with some principles we consider key to programming with assistants, and then cover practices and use cases.

Principles for developing with AI assistants

Because assistants are so powerful and can generate large amounts of code for us, we believe it is essential to have clear principles that guide how we use them at all times. The goal is to produce code with them while raising our quality standards, not lowering them. We do not believe in approaches that prioritize speed and overlook important aspects such as the quality and security of the generated code.

Principle 1 - Review: Current code assistants frequently make mistakes, so we must ALWAYS carefully review the generated code.

Principle 2 - Ownership: The code generated by AI is our code in every respect, and we are solely responsible for it.

Principle 3 - Experimentation: The same prompt may work in some cases and not in others, and an initial wrong answer doesn't mean AI cannot help us. It's worth retrying with a modified prompt: adding context, giving more detailed instructions, and so on. An experimental attitude will help us learn how to make the most of it in our particular context.

Principle 4 - Context: The quality and suitability of the response will largely depend on the context the assistant has. An adequate context contains the source code needed to make the change, along with instructions and rules that guide how it should be done. In some situations, project documentation may also be necessary.

Recommended usage practices

Strengthen development best practices: code reviews, automated tests, linters, etc. are more necessary than ever, because incorporating AI into your team is equivalent to scaling the team: the more code you produce, the higher the risk of introducing bugs or technical debt.

Plan before starting: By default, assistants jump straight to the solution: they deliver the code and then explain it. However, for changes of some complexity, it is advisable to first ask the assistant to design the approach and a plan for the changes. That way, we can review the approach, suggest changes, or discard it before any code is written.

Divide and conquer: Just as with a large task or user story, breaking the work into smaller subtasks pays off. Asking the assistant for small steps lets us move forward with more confidence. Ideally: request a small task → review and adjust the code → test it → repeat the loop.

In case of errors, retry with another prompt or model: As mentioned before, AI makes mistakes. However, an incorrect response to a prompt doesn't mean AI can't be used for that task. In these cases, it's useful to understand where the error comes from and retry accordingly. Examples:

Lack of context -> Reference the relevant source files or the complete code. Generate documentation files per module to provide to the AI, e.g. a document describing the data model.

Lack of specification -> Add instructions detailing how we want the changes to be made, or define rule files.

Lack of capacity due to complexity -> Break the work down into smaller, simpler tasks, or use a more advanced reasoning model, e.g. Gemini 2.5 Pro, Claude 3.7 Sonnet Thinking, o3, …

Lack of capacity due to lack of data -> For example, when we ask for something very niche that the model has not “seen” during training. In that case, it's best not to use AI and fall back to the traditional approach.

Define project rules: Cursor and VS Code let you define rules and store them in your repository (for example, in the .cursor/rules directory for Cursor, or in .github/copilot-instructions.md for GitHub Copilot) so you don't have to repeat them in every prompt. These rules keep the AI's responses consistent and aligned with your context, and let you define the style of both the answers and the code the assistant generates. For example, a rule can state that the Tailwind library must always be used for frontend styling, or that camelCase must be used for naming.

Choose when to use it and when not to: With practice, you learn for which tasks AI is useful and for which it is counterproductive. As a general rule, if a task is complex or highly dependent on our context, it is usually better to start without AI and use it in a limited way for more specific, simpler subtasks. For simple and repetitive tasks, AI will indeed save us a lot of time.

Create a forum to share experiences internally: No one is better placed than colleagues who share your projects and context to exchange experiences and learn what works best. A Slack channel, a wiki, or a shared document where experiences are recorded can accelerate collective learning and help identify best practices for your specific context.

Use cases

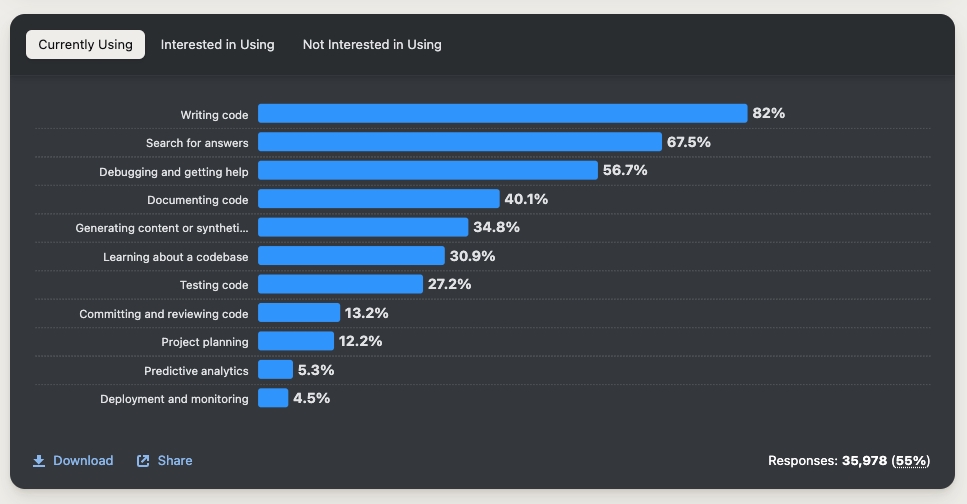

Generative AI assistants integrated into the IDE support many more use cases than writing code. The image shows a 2024 Stack Overflow report on AI usage, which makes clear how developers are using the technology.

Information search. We can ask questions directly, without leaving the IDE to open a browser and search on Google.

Code review. Ask it to review the code for bugs, issues, and security risks; we can provide a reference guide so it knows how to conduct the review.

Create tests. If we have code without tests, we can ask it to create unit tests for us. It is a good idea to give it a reference, such as an existing test file, so that the new tests are consistent. It can also be used for TDD: we ask it to write the tests first and use them to design the functionality.
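As an illustration, a reference test file could look like the minimal sketch below, written with Python's standard unittest module. The slugify function and its tests are hypothetical, made up for this example; what matters is that the file makes its conventions explicit (one behavior per test, descriptive test_<behavior> names, plain assertEqual checks) so the assistant can mirror them when asked to write new tests.

```python
import re
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: lowercase the title and join its words with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


class SlugifyTest(unittest.TestCase):
    # Convention: one behavior per test, named test_<expected_behavior>

    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(slugify("AI, code & tests!"), "ai-code-tests")

    def test_empty_title_returns_empty_slug(self):
        self.assertEqual(slugify(""), "")
```

With a file like this in the context, a prompt such as "write tests for this module following the style of the reference file" gives the assistant a concrete pattern to copy, and the resulting tests can be run with the standard python -m unittest runner.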

Debug. If we paste the stack trace and provide the relevant code, it can analyze the problem and propose fixes that are often correct. It's also very useful to ask it to add traces and logs to the code to help us debug.
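For example, asking the assistant to "add logs to trace this function" might produce something like the following sketch, which uses Python's standard logging module. The apply_discount function is hypothetical; the pattern it illustrates is logging the inputs, logging an invalid value before failing, and logging the result, so the logs show where a wrong value entered.

```python
import logging

logger = logging.getLogger(__name__)


def apply_discount(price, percent):
    """Hypothetical function instrumented with debug traces."""
    # Trace the inputs so a failing call can be reproduced from the logs
    logger.debug("apply_discount called with price=%r percent=%r", price, percent)
    if not 0 <= percent <= 100:
        # Log the offending value before failing, to make the root cause visible
        logger.error("Invalid discount percent: %r", percent)
        raise ValueError(f"percent must be between 0 and 100, got {percent}")
    result = price * (100 - percent) / 100
    # Trace the output to check it against what the caller expected
    logger.debug("apply_discount result=%r", result)
    return result
```

The debug traces stay silent until DEBUG output is enabled, for example with logging.basicConfig(level=logging.DEBUG), so they can be turned on while investigating and removed or kept once the bug is fixed.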

Refactoring. Given existing code, we can ask it to help refactor it following a guide of good practices and style.

Understand legacy code. Ask it to explain the code, generate diagrams, answer questions about it, etc.

Documenting. It's very effective for creating a structure and a first draft, saving us from starting with a blank page. We will likely need to review and change things, and we can provide templates for structuring the information.

Brainstorming and planning. When we need to tackle a new project or task and want to explore options, we can ask the assistant to suggest several ways to approach it. One strength of LLMs is generating many options, since they have been trained on vast amounts of data.

Specifications. If a task isn't sufficiently specified, we can ask the assistant to draft a specification, which serves as a starting point to refine into the one we want.

Evaluate technical decisions. When choosing between several technologies or solutions for a problem, AI can help by listing the pros and cons of each and digging into the points we care about, so we can make a more informed decision.

Automate tasks in the local environment. It works very well for creating scripts that automate local work.

Migration to other technologies and languages. For example, migrating a project from Java 8 to Java 24, or migrating the ORM we use.

Deployment and integration automation (CI/CD pipelines). Especially when starting a new project, it lets us create pipelines for our platform in very little time.

Adapt the code to make it accessible. This is a somewhat tedious but very necessary task that is rarely overly complex, which makes it an ideal use case for AI.

Log and large dataset analysis. Especially when investigating production issues, AI can greatly help us analyze data and find patterns. However, instead of passing a log to the AI to extract errors, the better approach is to have it write a script that does so. This usually yields much better results and avoids sharing production data with the AI.
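Here is a minimal sketch of the kind of script we might ask the assistant to write, in Python. The log line format (date, time, level, [module], message) is an assumption made for illustration, as is the script name in the usage comment; a real script would have to match our actual log format. It groups ERROR lines by module and message, masking numbers so that errors that differ only in IDs or durations are counted together. Only the script is shared with the AI; the production log is processed locally.

```python
import re
import sys
from collections import Counter

# Assumed (hypothetical) line format:
# 2024-05-01 12:00:00 ERROR [payment] Timeout after 30s for order 123
LINE_RE = re.compile(
    r"^\S+ \S+ (?P<level>[A-Z]+) \[(?P<module>[^\]]+)\] (?P<message>.*)$"
)


def summarize_errors(lines):
    """Count ERROR lines grouped by (module, normalized message).

    Lines that don't match the assumed format are skipped.
    """
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line.rstrip("\r\n"))
        if match is None or match.group("level") != "ERROR":
            continue
        # Mask numbers so messages differing only in IDs or durations group together
        message = re.sub(r"\d+", "<n>", match.group("message"))
        counts[(match.group("module"), message)] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file names): python summarize_errors.py app.log
    with open(sys.argv[1], encoding="utf-8") as log_file:
        for (module, message), n in summarize_errors(log_file).most_common(10):
            print(f"{n:6d}  {module}: {message}")
```

Because the script reads the file line by line, it also works on logs far too large to paste into a prompt, and we can check its logic ourselves before trusting the counts.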

TL;DR

Generative AI assistants in development are here to stay, so it is essential to learn how to make the most of them to accelerate development and increase software quality. If you take away just a few ideas from this post, make them these:

(Quality) Focus on good development practices to ensure quality and security.

(Review) Review ALL generated code.

(Ownership) The code generated by the AI is still YOUR responsibility.

(Divide and conquer) Prefer small iterations: prompt -> review and adjust -> test.

(Judgment) Decide when to use it and when not to.

(Shared learning) Create forums to share knowledge within the company.

As a Fractional CTO, I have helped several companies adopt generative AI as part of their development processes, so I know how to define and execute a strategy that maximizes the value of these tools while limiting the risks. If you are considering adopting these assistants and want to do so in a robust, reliable way, I can help you.